A Practical Guide to MLOps: From Development to Deployment

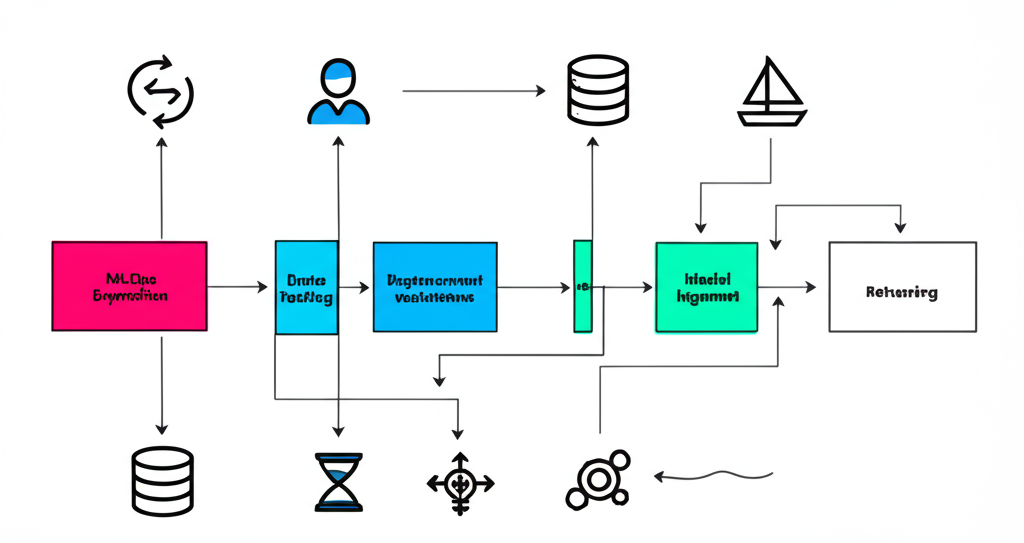

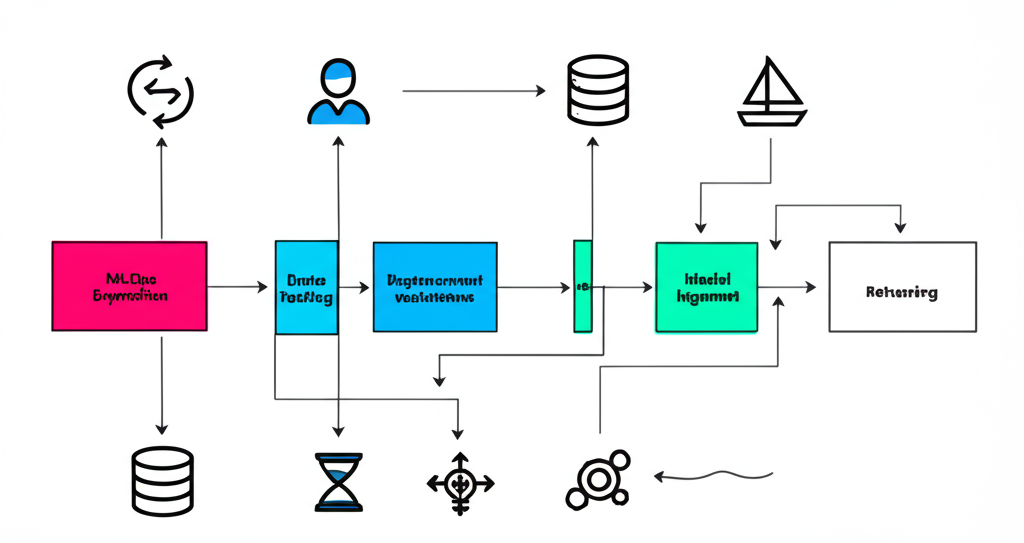

Machine Learning Operations (MLOps) is the practice of reliably developing, testing, deploying, and maintaining machine learning models in production. This guide walks through the key components of MLOps and how to implement them in your organization.

What is MLOps?

MLOps combines machine learning, DevOps, and data engineering to streamline the machine learning lifecycle. It addresses the unique challenges of deploying ML models in production, such as reproducibility, versioning, monitoring, and governance.

Key Components of MLOps

1. Version Control

Version control is essential for tracking changes to code, data, and models. Versioning all three together is what makes a past result reproducible and lets a team collaborate without overwriting each other's work.

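Example using DVC (Data Version Control) for data and model versioning, driven from Python:

    import subprocess

    def run(cmd):
        # Unlike os.system, check=True raises CalledProcessError if a command fails
        subprocess.run(cmd, check=True)

    # Initialize DVC (run once, inside an existing Git repository)
    run(["dvc", "init"])

    # Track the dataset and the model with DVC. Each call writes a small
    # <file>.dvc pointer file and adds the real file to .gitignore
    run(["dvc", "add", "data/training_data.csv"])
    run(["dvc", "add", "models/trained_model.pkl"])

    # Commit the pointer files (not the data itself) to Git
    run(["git", "add", "data/training_data.csv.dvc", "data/.gitignore",
         "models/trained_model.pkl.dvc", "models/.gitignore"])
    run(["git", "commit", "-m", "Add training data and model"])

    # Upload the data and model to remote storage
    # (assumes a default remote was configured, e.g. with `dvc remote add -d`)
    run(["dvc", "push"])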
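
To see what `dvc add` buys you, here is a toy version of the idea it builds on: store each file in a cache under a hash of its contents, and keep only that small hash under Git. This is a simplified illustration, not DVC's actual code; the function names and the cache layout are made up for the example.

```python
import hashlib
import shutil
from pathlib import Path

def file_md5(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file's contents in chunks so large datasets never load fully into memory."""
    digest = hashlib.md5()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

def commit_file(path: Path, cache_dir: Path) -> str:
    """Copy the file into a cache keyed by its hash and return the hash (the 'pointer')."""
    h = file_md5(path)
    target = cache_dir / h[:2] / h[2:]
    if not target.exists():  # identical content is stored only once
        target.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(path, target)
    return h

def checkout_file(h: str, path: Path, cache_dir: Path) -> None:
    """Restore the exact version identified by hash h into the workspace."""
    shutil.copy2(cache_dir / h[:2] / h[2:], path)
```

Because the pointer is derived from the content, two commits that reference the same hash are guaranteed to see byte-identical data, which is the reproducibility property the section describes.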

2. Continuous Integration and Continuous Deployment (CI/CD)

CI/CD pipelines automate the testing, training, and deployment of ML models, so that a model reaches production only after it has passed automated checks.

Example GitHub Actions workflow for ML model CI/CD (workflow files live under `.github/workflows/` in the repository):

    name: ML Model CI/CD

    on:
      push:
        branches: [ main ]
      pull_request:
        branches: [ main ]

    jobs:
      test:
        runs-on: ubuntu-latest
        steps:
          - uses: actions/checkout@v4
          - name: Set up Python
            uses: actions/setup-python@v5
            with:
              python-version: '3.11'
          - name: Install dependencies
            run: |
              python -m pip install --upgrade pip
              pip install -r requirements.txt
          - name: Run tests
            run: pytest tests/

      train:
        needs: test
        runs-on: ubuntu-latest
        steps:
          - uses: actions/checkout@v4
          - name: Set up Python
            uses: actions/setup-python@v5
            with:
              python-version: '3.11'
          - name: Install dependencies
            run: |
              python -m pip install --upgrade pip
              pip install -r requirements.txt
          - name: Train model
            run: python train.py
          - name: Upload model artifact
            uses: actions/upload-artifact@v4
            with:
              name: model
              path: models/trained_model.pkl

      deploy:
        needs: train
        # Deploy only on pushes to main, never from pull requests
        if: github.event_name == 'push' && github.ref == 'refs/heads/main'
        runs-on: ubuntu-latest
        steps:
          - uses: actions/checkout@v4
          - name: Set up Python
            uses: actions/setup-python@v5
            with:
              python-version: '3.11'
          - name: Install dependencies
            run: |
              python -m pip install --upgrade pip
              pip install -r requirements.txt
          - name: Download model artifact
            uses: actions/download-artifact@v4
            with:
              name: model
              path: models/
          - name: Deploy to production
            run: |
              # Deploy model to production environment
              python deploy.py
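
The workflow above retrains and deploys whenever the tests pass, but passing unit tests says nothing about whether the new model is any good. A common addition is a quality gate: a small script the train job runs before uploading the artifact, which fails the job when the candidate model scores worse than a baseline. A minimal sketch, assuming `train.py` writes the candidate's metrics to a JSON file and a baseline metrics file is available; the function names, file layout, metric names, and tolerance are all hypothetical.

```python
import json

def passes_gate(candidate, baseline, metrics=("accuracy", "f1_score"), tolerance=0.0):
    """True if the candidate is no worse than the baseline on every metric.

    tolerance allows a small regression (e.g. 0.005) to absorb evaluation noise.
    """
    return all(candidate[m] >= baseline[m] - tolerance for m in metrics)

def main(candidate_path, baseline_path):
    """Compare two JSON metric files; return a process exit code (0 = pass)."""
    with open(candidate_path) as f:
        candidate = json.load(f)
    with open(baseline_path) as f:
        baseline = json.load(f)
    if passes_gate(candidate, baseline):
        print("Quality gate passed.")
        return 0
    print("Candidate model is worse than the baseline; failing the job.")
    return 1
```

Saved as a script (for example a hypothetical `check_model.py` ending in `sys.exit(main(sys.argv[1], sys.argv[2]))`), it would run as a step between "Train model" and "Upload model artifact". Because the step exits non-zero on failure, the upload step and the dependent deploy job never run.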

3. Model Registry

A model registry stores and manages ML models, making it easy to track different versions and deploy them to various environments.

Example using MLflow as a model registry (assumes an MLflow tracking server is running at http://localhost:5000):

    import mlflow
    import mlflow.sklearn

    # Point the client at the tracking server
    mlflow.set_tracking_uri("http://localhost:5000")

    # Create the experiment if it does not exist yet, and make it the active one
    mlflow.set_experiment("fraud_detection")

    with mlflow.start_run():
        # Log hyperparameters
        params = {"learning_rate": 0.01, "batch_size": 64}
        mlflow.log_params(params)

        # Train model (train_model is a placeholder for your own training code)
        model = train_model(**params)

        # Log evaluation metrics (placeholder values; compute them on held-out data)
        mlflow.log_metric("accuracy", 0.92)
        mlflow.log_metric("f1_score", 0.89)

        # Log the fitted scikit-learn model as an artifact of this run
        model_info = mlflow.sklearn.log_model(model, "model")

    # Register the logged model as a new version of "fraud_detection_model"
    mv = mlflow.register_model(model_info.model_uri, "fraud_detection_model")
    print(f"Model registered: {mv.name} version {mv.version}")
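
To make the registry's job concrete, here is a toy in-memory version of the two things it tracks: numbered versions per model name, and movable labels (aliases) that deployment code resolves instead of hard-coding a version number. It is an illustration only, not how MLflow stores anything; MLflow offers comparable operations through `MlflowClient` (for example `set_registered_model_alias`), and the class and method names below are made up.

```python
from dataclasses import dataclass, field

@dataclass
class ModelVersion:
    name: str
    version: int
    source_uri: str  # where the model artifact lives, e.g. a run artifact URI

@dataclass
class ToyModelRegistry:
    """In-memory illustration of what a model registry tracks."""
    versions: dict = field(default_factory=dict)  # name -> list of ModelVersion
    aliases: dict = field(default_factory=dict)   # (name, alias) -> version number

    def register(self, name: str, source_uri: str) -> ModelVersion:
        # Versions are numbered 1, 2, 3, ... per registered model name
        history = self.versions.setdefault(name, [])
        mv = ModelVersion(name, len(history) + 1, source_uri)
        history.append(mv)
        return mv

    def set_alias(self, name: str, alias: str, version: int) -> None:
        # Promote (or roll back) by pointing an alias such as "production" at a version
        if not 1 <= version <= len(self.versions.get(name, [])):
            raise ValueError(f"{name} has no version {version}")
        self.aliases[(name, alias)] = version

    def get(self, name: str, alias: str) -> ModelVersion:
        # Serving code asks for "production" and gets whichever version it points to
        return self.versions[name][self.aliases[(name, alias)] - 1]
```

Rolling back then means moving the alias to an older version, with no change to the serving code.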

4. Feature Store

A feature store centralizes the storage, management, and serving of features for machine learning models.

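Example using the Feast feature store (assumes a Feast repository in the current directory that defines the `customer_features` and `transaction_features` feature views):

    from feast import FeatureStore
    import pandas as pd

    # Initialize the feature store from the repository in the current directory
    store = FeatureStore(repo_path=".")

    # Entity dataframe: which customers, and as of which point in time
    entity_df = pd.DataFrame({
        "customer_id": [1, 2, 3, 4],
        "event_timestamp": pd.to_datetime([
            "2021-04-01", "2021-04-02", "2021-04-03", "2021-04-04"
        ]),
    })

    # Attach labels before retrieval (get_labels is a placeholder). Feast passes
    # extra entity columns through, so labels stay aligned with their features
    # even if the returned rows come back in a different order
    entity_df["label"] = get_labels(entity_df)

    # Retrieve feature values as they were at each row's event_timestamp
    training_df = store.get_historical_features(
        entity_df=entity_df,
        features=[
            "customer_features:age",
            "customer_features:income",
            "transaction_features:transaction_count_7d",
            "transaction_features:average_transaction_amount_30d",
        ],
    ).to_df()

    # Split into features and labels, then train (train_model is a placeholder)
    X = training_df.drop(columns=["customer_id", "event_timestamp", "label"])
    y = training_df["label"]
    model = train_model(X, y)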
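
`get_historical_features` performs a point-in-time join: for each entity row it picks the most recent feature value observed at or before that row's `event_timestamp`, so training never sees values from the future (data leakage). A plain-Python sketch of that join for a single feature, ignoring refinements Feast adds such as feature TTLs; the function name and row formats are assumptions for this example.

```python
from bisect import bisect_right

def point_in_time_join(entity_rows, feature_rows, feature_name):
    """Attach to each entity row the latest feature value at or before its timestamp.

    entity_rows:  list of dicts with "customer_id" and "event_timestamp"
    feature_rows: list of (customer_id, timestamp, value) tuples
    Rows with no earlier observation get None rather than a value from the future.
    """
    # Group observations per customer, sorted by time
    by_key = {}
    for key, ts, value in sorted(feature_rows, key=lambda r: (r[0], r[1])):
        times, values = by_key.setdefault(key, ([], []))
        times.append(ts)
        values.append(value)

    joined = []
    for row in entity_rows:
        times, values = by_key.get(row["customer_id"], ([], []))
        # Index of the last observation with timestamp <= event_timestamp
        i = bisect_right(times, row["event_timestamp"]) - 1
        joined.append({**row, feature_name: values[i] if i >= 0 else None})
    return joined
```

A naive join on `customer_id` alone would attach the latest value regardless of time, which is exactly the leakage this avoids.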

5. Model Monitoring

Monitoring ML models in production is crucial for detecting performance degradation, data drift, and other issues.

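Example using Evidently for drift monitoring. The `Dashboard`/`tabs` API found in older tutorials is no longer available in current Evidently releases; this version uses the `Report` API from the 0.4.x releases (later releases reorganized the API again, so check the documentation for your installed version):

    import pandas as pd
    from evidently.report import Report
    from evidently.metrics import ColumnDriftMetric, DataDriftTable, DatasetDriftMetric

    # Load reference (e.g. training-time) and current (production) data
    reference_data = pd.read_csv("data/reference.csv")
    current_data = pd.read_csv("data/current.csv")

    # Dataset-level drift summary, per-column drift table, and target drift
    # (ColumnDriftMetric assumes the label column is named "target")
    report = Report(metrics=[
        DatasetDriftMetric(),
        DataDriftTable(),
        ColumnDriftMetric(column_name="target"),
    ])
    report.run(reference_data=reference_data, current_data=current_data)

    # Save an HTML report for people to inspect
    report.save_html("monitoring_report.html")

    # Alert when more than 30% of columns have drifted
    # (send_alert is a placeholder for your paging or chat integration)
    drift = report.as_dict()["metrics"][0]["result"]
    if drift["share_of_drifted_columns"] > 0.3:
        send_alert("High data drift detected!")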
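
Under the hood, drift detection compares the distribution of each column in the reference data with the same column in the current data. A dependency-free sketch using the two-sample Kolmogorov-Smirnov statistic (the largest gap between the two empirical CDFs), combined with the same share-of-drifted-columns rule as the alert above. The default threshold of 0.1 on the statistic is an arbitrary assumption; Evidently instead picks a statistical test per column based on its type and sample size, so its results will differ.

```python
def ks_statistic(reference, current):
    """Two-sample Kolmogorov-Smirnov statistic: max gap between the empirical CDFs."""
    a, b = sorted(reference), sorted(current)
    n, m = len(a), len(b)
    i = j = 0
    d = 0.0
    while i < n and j < m:
        x = min(a[i], b[j])
        # Step past every value <= x in both samples so ties are handled correctly
        while i < n and a[i] <= x:
            i += 1
        while j < m and b[j] <= x:
            j += 1
        d = max(d, abs(i / n - j / m))
    return d

def share_of_drifted_columns(reference, current, threshold=0.1):
    """Fraction of numeric columns whose KS statistic exceeds the threshold.

    reference, current: dicts mapping column name -> non-empty list of values.
    """
    drifted = [col for col in reference
               if ks_statistic(reference[col], current[col]) > threshold]
    return len(drifted) / len(reference)
```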

6. Model Serving

Serving ML models efficiently is key to providing low-latency predictions in production.

Example using FastAPI for model serving:

    import joblib
    import numpy as np
    from fastapi import FastAPI
    from pydantic import BaseModel

    app = FastAPI()

    # Load the model once at startup rather than on every request
    model = joblib.load("models/trained_model.pkl")

    # Request body: one row of numeric features, in training-column order
    class PredictionRequest(BaseModel):
        features: list[float]

    # Prediction endpoint (assumes a classifier with predict_proba and integer class labels)
    @app.post("/predict")
    def predict(request: PredictionRequest):
        # Reshape to a single-row 2D array, as scikit-learn expects
        features = np.array(request.features).reshape(1, -1)
        prediction = model.predict(features)[0]
        probability = model.predict_proba(features)[0].max()
        return {
            "prediction": int(prediction),
            "probability": float(probability),
        }
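
A client calls the endpoint with a JSON body matching `PredictionRequest`. A stdlib-only sketch, assuming the app is served by uvicorn on its default port 8000 (for example `uvicorn main:app`, where `main.py` is a hypothetical file name for the code above); the helper names are made up.

```python
import json
import urllib.request

def build_predict_request(features, url="http://localhost:8000/predict"):
    """Build a POST request whose JSON body matches the PredictionRequest model."""
    body = json.dumps({"features": list(features)}).encode("utf-8")
    return urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )

def predict_remote(features, url="http://localhost:8000/predict", timeout=5.0):
    """Send the request and decode the {"prediction", "probability"} response."""
    with urllib.request.urlopen(build_predict_request(features, url), timeout=timeout) as resp:
        return json.loads(resp.read())
```

With the server running, `predict_remote([...])` returns a dict with the two keys the endpoint produces; a request whose `features` are not numbers is rejected by FastAPI's validation before the model is called.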

Implementing MLOps in Your Organization

Step 1: Assess Your Current State

Before implementing MLOps, assess your organization's current ML workflow:

- How are models currently developed and deployed?

- What are the pain points in the current process?

- What tools and technologies are already in use?

Step 2: Define Your MLOps Strategy

Based on your assessment, define an MLOps strategy that addresses your specific needs:

- Which MLOps components are most critical for your organization?

- What tools and technologies will you use?

- How will you measure success?

Step 3: Start Small and Iterate

Don't try to implement everything at once. Start with a single project and the one or two components that address your biggest pain points, then expand as the team gains experience.

Step 4: Build a Culture of Collaboration

MLOps requires collaboration between data scientists, ML engineers, DevOps engineers, and other stakeholders:

- Foster communication and knowledge sharing

- Define clear roles and responsibilities

- Provide training and resources

Common MLOps Challenges and Solutions

Challenge 1: Data Quality and Governance

Solution: Implement data validation, versioning, and lineage tracking. Use tools like Great Expectations for data validation and DVC for versioning.
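
Great Expectations expresses checks like these as declarative "expectations" (for example, that a column is never null or that its values fall within a range). The plain-Python sketch below shows the same kind of checks without the library, so a pipeline can refuse to train on a batch that fails them; the function name and the schema format are made up for this example.

```python
def validate_rows(rows, schema):
    """Check each row against simple expectations; return human-readable failures.

    schema maps column -> dict with optional keys:
      "required" (bool), "min" / "max" (numbers), "allowed" (set of values)
    """
    failures = []
    for idx, row in enumerate(rows):
        for col, rules in schema.items():
            value = row.get(col)
            if value is None:
                # Missing values only count as failures for required columns
                if rules.get("required", False):
                    failures.append(f"row {idx}: {col} is missing")
                continue
            if "min" in rules and value < rules["min"]:
                failures.append(f"row {idx}: {col}={value} < {rules['min']}")
            if "max" in rules and value > rules["max"]:
                failures.append(f"row {idx}: {col}={value} > {rules['max']}")
            if "allowed" in rules and value not in rules["allowed"]:
                failures.append(f"row {idx}: {col}={value!r} not in allowed set")
    return failures
```

A pipeline step would typically stop (and alert) whenever the returned list is non-empty.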

Challenge 2: Model Reproducibility

Solution: Make training deterministic (fix random seeds and pin dependency versions), version code and data together, and use containerization so every run sees the same environment.
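
Fixing random seeds is the simplest part of a deterministic pipeline. A minimal sketch that seeds Python's `random` module and, if it is installed, NumPy's global generator; the helper name is hypothetical. Libraries with their own generators need their own settings (scikit-learn estimators take a `random_state` argument, and GPU frameworks may need extra determinism flags), and seeds do nothing about unpinned dependencies, which is what containers and lock files address.

```python
import os
import random

def set_seeds(seed: int) -> None:
    """Seed the random number generators a typical training run touches."""
    random.seed(seed)
    # PYTHONHASHSEED only affects interpreters started after this point (e.g. worker processes)
    os.environ["PYTHONHASHSEED"] = str(seed)
    try:
        import numpy as np
        np.random.seed(seed)
    except ImportError:
        pass  # NumPy not installed; nothing else to seed
```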

Challenge 3: Model Deployment Delays

Solution: Automate the deployment process with CI/CD pipelines and standardize model packaging formats (e.g., ONNX, TensorFlow SavedModel).

Challenge 4: Model Performance Degradation

Solution: Implement comprehensive monitoring for data drift, concept drift, and model performance. Set up automated retraining when performance drops below thresholds.
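
Performance-based retraining needs ground truth, which often arrives later than the prediction (for example, when a transaction is confirmed as fraud). A sketch of a trigger that tracks accuracy over a sliding window of recent labeled predictions and signals when it falls below a threshold; the class name, window size, and minimum sample count are assumptions, and what "start retraining" means (launching the training pipeline, opening a ticket) is left to the caller.

```python
from collections import deque

class RetrainingTrigger:
    """Sliding-window accuracy monitor that flags when retraining is needed."""

    def __init__(self, threshold: float, window: int = 500, min_samples: int = 100):
        self.threshold = threshold
        self.min_samples = min_samples        # avoid firing on a handful of early outcomes
        self.outcomes = deque(maxlen=window)  # 1 = correct prediction, 0 = wrong

    def record(self, prediction, actual) -> bool:
        """Record one outcome once its true label is known; True means retrain."""
        self.outcomes.append(1 if prediction == actual else 0)
        if len(self.outcomes) < self.min_samples:
            return False
        accuracy = sum(self.outcomes) / len(self.outcomes)
        return accuracy < self.threshold
```

Because the deque drops the oldest outcomes, the trigger reacts to recent degradation rather than to the model's lifetime average.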

Conclusion

MLOps is essential for organizations looking to derive real value from their machine learning initiatives. By implementing the key components of MLOps (version control, CI/CD, model registry, feature store, monitoring, and serving), you can streamline the ML lifecycle and ensure that your models perform reliably in production.

Remember that MLOps is not just about tools and technologies; it's also about people and processes. Building a culture of collaboration and continuous improvement is just as important as implementing the right technical solutions.

Start small, iterate, and gradually build a robust MLOps practice that meets your organization's specific needs.