Demystifying Transformer Architecture for NLP Beginners

The transformer architecture has revolutionized natural language processing since its introduction in the paper "Attention Is All You Need" by Vaswani et al. in 2017. This architecture has become the foundation for state-of-the-art models like BERT, GPT, and T5.

What is a Transformer?

At its core, the transformer is a neural network architecture designed to handle sequential data, particularly text. Unlike its recurrent predecessors (RNNs, including LSTMs), a transformer processes all positions of a sequence at once rather than one element at a time, which makes its computation highly parallelizable on modern hardware.

Key Components

1. Self-Attention Mechanism

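The self-attention mechanism is the heart of the transformer architecture. It allows the model to weigh the importance of every other word in a sentence when processing a specific word. This is crucial for understanding context and resolving ambiguities: in "The animal didn't cross the street because it was too tired," attention is what lets the model connect "it" to "animal" rather than "street."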

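    import math
    import torch
    import torch.nn.functional as F

    def self_attention(query, key, value):
        # Scaled dot-product attention
        d_k = query.size(-1)  # query/key dimension, used to scale the scores
        scores = torch.matmul(query, key.transpose(-2, -1)) / math.sqrt(d_k)
        attention_weights = F.softmax(scores, dim=-1)  # each row sums to 1
        return torch.matmul(attention_weights, value), attention_weights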
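
To make the arithmetic concrete, here is a minimal sketch of the same computation in plain Python (no PyTorch) for a single query vector. The helper names `softmax` and `attend` and the toy numbers are assumptions chosen for illustration, not part of any library.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, keys, values):
    """Scaled dot-product attention for one query vector (illustrative helper)."""
    d_k = len(query)
    # One score per key: dot product with the query, scaled by sqrt(d_k).
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d_k)
              for key in keys]
    weights = softmax(scores)
    # The output is the weighted average of the value vectors.
    output = [sum(w * v[i] for w, v in zip(weights, values))
              for i in range(len(values[0]))]
    return output, weights

# Made-up 2-dimensional vectors for a three-token sentence.
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
query = [1.0, 0.0]

output, weights = attend(query, keys, values)
print(weights)  # the first and third keys match the query best
print(output)
```

The weights always sum to 1, so the output is a blend of the value vectors, dominated by the tokens whose keys align most closely with the query.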

2. Multi-Head Attention

Multi-head attention extends self-attention by running multiple attention operations in parallel. This allows the model to focus on different aspects of the input simultaneously.
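
The bookkeeping behind "multiple heads" can be sketched in plain Python. This shows only how one vector of size d_model is divided among the heads and stitched back together; in a real layer the queries, keys, and values are first produced by learned linear projections, each head runs attention on its own slice, and the concatenated result passes through a final learned projection. The function names `split_heads` and `merge_heads` are hypothetical.

```python
def split_heads(vector, num_heads):
    """Split one d_model-sized vector into num_heads equal slices."""
    d_model = len(vector)
    if d_model % num_heads != 0:
        raise ValueError("d_model must be divisible by num_heads")
    d_head = d_model // num_heads
    return [vector[h * d_head:(h + 1) * d_head] for h in range(num_heads)]

def merge_heads(heads):
    """Concatenate per-head slices back into one d_model-sized vector."""
    return [x for head in heads for x in head]

token = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8]  # d_model = 8
heads = split_heads(token, num_heads=2)            # two heads of size 4
restored = merge_heads(heads)

print(heads)     # [[0.1, 0.2, 0.3, 0.4], [0.5, 0.6, 0.7, 0.8]]
print(restored)  # same values as token
```

Because each head works on a smaller slice, running several heads costs roughly the same as one full-width attention operation.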

3. Position-wise Feed-Forward Networks

After the attention layer, each position goes through a feed-forward network independently. This consists of two linear transformations with a ReLU activation in between.
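
Written out, the network computes FFN(x) = ReLU(x W1 + b1) W2 + b2 for each position x. The following plain-Python sketch applies it to a two-token sequence; the tiny weight matrices are invented for illustration (real models learn them and use far larger sizes).

```python
def linear(x, weight, bias):
    """Compute x @ weight + bias, where weight has shape [d_in][d_out]."""
    return [sum(xi * row[j] for xi, row in zip(x, weight)) + bias[j]
            for j in range(len(bias))]

def relu(x):
    """Replace negative entries with zero."""
    return [max(0.0, xi) for xi in x]

def feed_forward(x, w1, b1, w2, b2):
    """FFN(x) = ReLU(x W1 + b1) W2 + b2 for a single position."""
    return linear(relu(linear(x, w1, b1)), w2, b2)

# Hypothetical weights: d_model = 2, hidden size = 3.
w1 = [[1.0, -1.0, 0.5],
      [0.0, 2.0, -1.0]]
b1 = [0.0, 0.0, 0.0]
w2 = [[1.0, 0.0],
      [0.0, 1.0],
      [1.0, 1.0]]
b2 = [0.0, 0.0]

sequence = [[1.0, 2.0], [3.0, -1.0]]
# The same weights are applied to every position, one position at a time.
outputs = [feed_forward(x, w1, b1, w2, b2) for x in sequence]
print(outputs)  # [[1.0, 3.0], [5.5, 2.5]]
```

Note that no position sees any other position here; mixing information across positions is the job of the attention layer.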

4. Positional Encoding

Since transformers process all words simultaneously, they need a way to understand word order. Positional encodings add information about the position of each word in the sequence.
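
The text above does not say which encoding scheme is used, so the following sketch assumes the fixed sinusoidal encoding from the original paper, where even dimensions use a sine and odd dimensions a cosine of the same angle. Learned position embeddings are another common choice. The function names are hypothetical.

```python
import math

def sinusoidal_position(pos, d_model):
    """Return the d_model-sized sinusoidal encoding for position pos."""
    encoding = []
    for i in range(d_model):
        # Dimensions 2k and 2k+1 share one frequency.
        angle = pos / (10000 ** ((2 * (i // 2)) / d_model))
        encoding.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return encoding

def add_positions(embeddings):
    """Add the positional encoding to each token embedding in a sequence."""
    d_model = len(embeddings[0])
    return [[e + p for e, p in zip(emb, sinusoidal_position(pos, d_model))]
            for pos, emb in enumerate(embeddings)]

print(sinusoidal_position(0, 4))  # [0.0, 1.0, 0.0, 1.0]
print(sinusoidal_position(1, 4))  # a different pattern for the next position

# Three identical (all-zero) embeddings become distinguishable by position.
encoded = add_positions([[0.0] * 4 for _ in range(3)])
```

Each position gets a distinct pattern, so two occurrences of the same word at different positions no longer look identical to the model.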

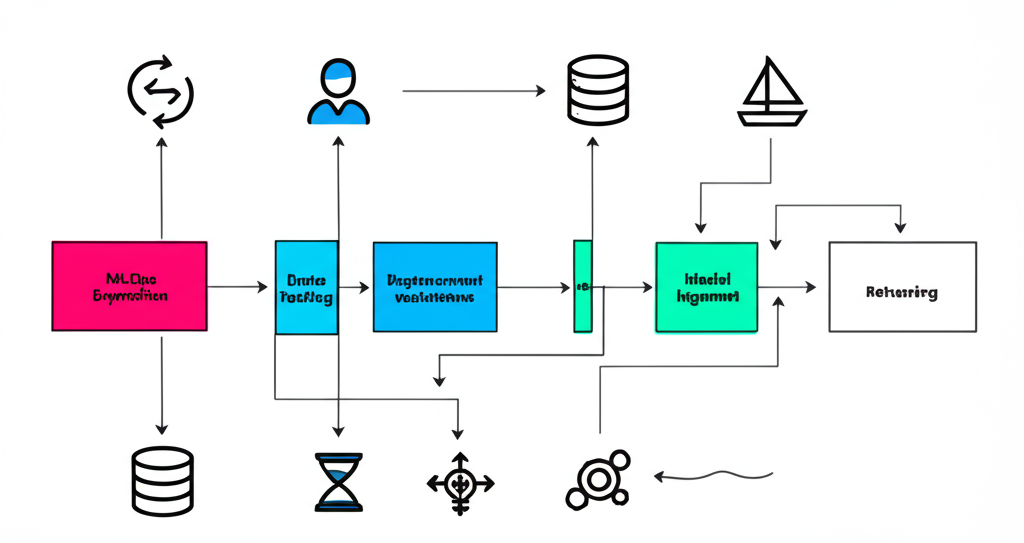

Transformer Architecture

The complete transformer architecture consists of an encoder and a decoder. Each is a stack of identical layers built from self-attention and feed-forward sub-layers, and each decoder layer additionally attends to the encoder's output.

Encoder

The encoder processes the input sequence and generates representations that capture the meaning of each word in context.

Decoder

The decoder generates the output sequence one token at a time, using both the encoder's output and its own previous outputs.
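
Restricting each position to "its own previous outputs" is usually enforced with a causal mask inside the decoder's self-attention, as in the original paper. Here is a minimal plain-Python sketch of that idea; `causal_mask` and `masked_softmax` are hypothetical helper names and the scores are made up.

```python
import math

def causal_mask(size):
    """mask[i][j] is True when position i may attend to position j (j <= i)."""
    return [[j <= i for j in range(size)] for i in range(size)]

def masked_softmax(scores, allowed):
    """Softmax over scores that gives zero weight to disallowed positions."""
    masked = [s if ok else -math.inf for s, ok in zip(scores, allowed)]
    m = max(masked)  # finite, because a position can always attend to itself
    exps = [math.exp(s - m) for s in masked]
    total = sum(exps)
    return [e / total for e in exps]

mask = causal_mask(3)
scores = [1.0, 2.0, 3.0]  # the same raw scores for every row, for simplicity
weights = [masked_softmax(scores, row) for row in mask]

for row in weights:
    print(row)
# The first row is [1.0, 0.0, 0.0]: the first token can only see itself.
```

Setting the blocked scores to negative infinity before the softmax means future tokens receive exactly zero weight, while the remaining weights still sum to 1.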

Applications in NLP

Transformers have enabled significant advances across NLP tasks such as machine translation, text classification, question answering, and summarization.

Implementing a Simple Transformer

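Here's a simplified implementation of a transformer encoder layer using PyTorch. Note that it applies layer normalization before each sub-layer (the "pre-norm" variant), whereas the original paper applies it after the residual connection: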

    import torch
    import torch.nn as nn

    class TransformerEncoderLayer(nn.Module):
        def __init__(self, d_model, nhead, dim_feedforward=2048, dropout=0.1):
            super().__init__()
            self.self_attn = nn.MultiheadAttention(d_model, nhead, dropout=dropout)
            # Position-wise feed-forward network
            self.linear1 = nn.Linear(d_model, dim_feedforward)
            self.dropout = nn.Dropout(dropout)
            self.linear2 = nn.Linear(dim_feedforward, d_model)
            self.norm1 = nn.LayerNorm(d_model)
            self.norm2 = nn.LayerNorm(d_model)
            self.dropout1 = nn.Dropout(dropout)
            self.dropout2 = nn.Dropout(dropout)
            self.activation = nn.ReLU()

        def forward(self, src, src_mask=None, src_key_padding_mask=None):
            # src: (seq_len, batch, d_model), the default layout of nn.MultiheadAttention
            # Self-attention sub-layer: normalize, attend, then add the residual
            src2 = self.norm1(src)
            src2, _ = self.self_attn(src2, src2, src2, attn_mask=src_mask,
                                     key_padding_mask=src_key_padding_mask)
            src = src + self.dropout1(src2)
            # Feed-forward sub-layer, using the same normalize-then-residual pattern
            src2 = self.norm2(src)
            src2 = self.linear2(self.dropout(self.activation(self.linear1(src2))))
            src = src + self.dropout2(src2)
            return src

Conclusion

The transformer architecture has transformed the field of NLP by enabling more efficient and effective processing of text data. Understanding its components and mechanics is essential for anyone working with modern NLP models.

As you continue your journey in NLP, experiment with pre-trained transformer models like BERT and GPT to see their capabilities firsthand. The concepts may seem complex at first, but with practice, you'll gain intuition for how these powerful models work.